Ragged Hierarchies are a common BI reporting structure in which the hierarchy contains a variable number of levels. Examples are an organization hierarchy, or a financial chart of accounts. Ragged hierarchies are cumbersome to navigate using standard SQL. But there are ways to simplify the navigation without compromising performance.

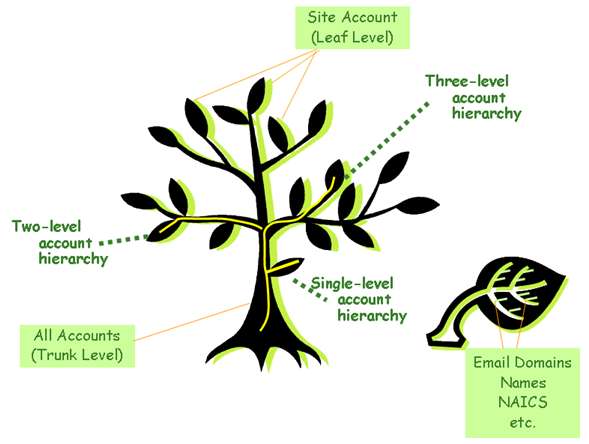

It is useful to employ a “tree” metaphor to visualize the modeling challenges of dimensional hierarchies. A “tree” is a collection of reporting hierarchies such as may exist in a product dimension or an organization dimension. A tree consists of “branches” that represent the levels of a hierarchy. Each branch contains one or more “nodes”. A node can be a “leaf” or another branch. A leaf is the most granular node of a hierarchy, and is referred to as a “leaf node”. Each leaf node contains the values of the various attributes of the dimension. The set of all nodes emanating from a given branch node is referred to as a “sub-tree”. A single “hierarchy” is the set of connected nodes between a given node and the trunk. The “trunk” represents the collection of all hierarchies in the dimensional tree.

A “ragged hierarchy” exists when the number of levels varies between hierarchies. Figure 1 depicts an Account dimension with ragged hierarchies. Three hierarchies are highlighted including a single, double, and triple level hierarchy.

Recursive Hierarchies

Hierarchies are often modeled using “parent keys” in the dimension table. A dimension exhibits recursive hierarchies when there can be two or more levels of levels of parent-child relationships in the same table. Dynamically navigating recursive hierarchies with a BI tool like Cognos or Business Objects is expensive. Each level requires a self-join. There are two basic types of dimension recursive hierarchies.

Fixed Recursive Hierarchies

If the number of levels in the hierarchy is fixed, then the dimension table contains fixed recursive hierarchies. An example is a standard product or merchandise hierarchies (e.g. family > class > commodity > category > item). Because the number of levels is known, the dimensions can be modeled with parent key column for each level of the hierarchy. However, this does not necessarily eliminate the need for recursive joins at query time.

Dynamic Recursive Hierarchies

If the number of levels in the hierarchy is indeterminate, then you have a ragged recursive hierarchy. Project and organization hierarchies are typically ragged. For example, a project may have one or more sub-projects, each of which could have its own sub-projects.

Left outer joins are required to navigate each level of a recursive hierarchy. If the dimensions are large and there are many levels in the hierarchy, performance could become an issue. It is not always possible to optimize recursive joins because the parent-key column functions as both a parent and a child. You cannot co-locate the parent and child rows on the same Netezza SPU. In databases like Oracle that use indexes, separately indexing the primary key and the parent key may offer some performance relief, but performance will still degrade as the number of levels (and therefore joins) increases.

Hierarchy Navigation Tables

A ragged hierarchy is simplified by creating a hierarchy navigation table in the database. Navigation tables can be directly queried by the BI tool without expensive recursive joins. Navigation tables can be optimized because the normal table joins are predictable, few in number, can be inner joined, and can be co-located with the main dimension table. There are two basic types of hierarchy navigation tables.

Vertical Navigation Tables

Vertical navigation tables contain a row for every level of every hierarchy. Thus, the leaf node is repeated for each level represented in its hierarchy. If a user wanted to roll-up costs to a mid-level node in the project hierarchy, they would simply select that node, and the navigation table would make a single join to the fact table, regardless of the number of levels in the hierarchy. Vertical navigation tables are useful when the user does not know the level the hierarchy they are querying.

Horizontal Navigation Tables

Horizontal (i.e., flattened) navigation table contain one row per hierarchy. The levels of the hierarchy are represented as columns. Thus, a horizontal navigation table will have fewer rows than a vertical navigation table for the same dimension. Horizontal navigation tables are useful when the user is selecting a specific level, or needs to display the parent path levels in a query or report.

Ragged Hierarchy Special Requirements

There can be variations in requirements within the general theme of ragged hierarchies. As usual, the devil is in the details. Specific modeling techniques to achieve optimum performance depend on the customer’s unique requirements. Examples of special hierarchy scenarios are described below.

Multiple Parent Nodes

This scenario breaks the strict hierarchical relationship of the dimension. A node can have 2 or more parents. For example, a project can be a member of two different “programs” at the same time. This requirement presents unique roll-up challenges.

Facts Attached to Multiple Levels

This scenario often exists in organizational hierarchies. Each organization has a budget and is responsible for the budgets of the organizations within its span of control. For example, Division A is responsible for the budges of Departments 1, 2 and 3. Department 1 is responsible for Units 1.a and 1.b. Thus, expense roll-up queries might include costs of the Division A sub-tree including, or excluding, the Division A’s own expenses.

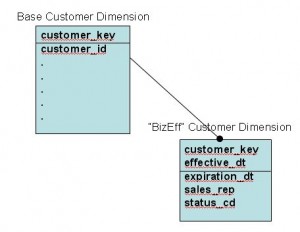

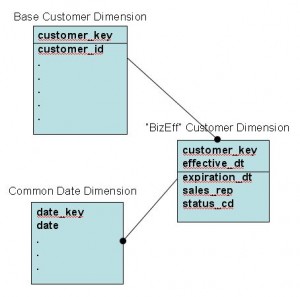

Versioned Hierarchies

Versioned hierarchies exist when the relationships of a hierarchy changes over time, and there is a requirement to report based on the hierarchy structure in effect as-of a point in time. For example, customer ownership relationships may change over time as a result of mergers and acquisitions.

Approach to Optimizing Ragged Hierarchies

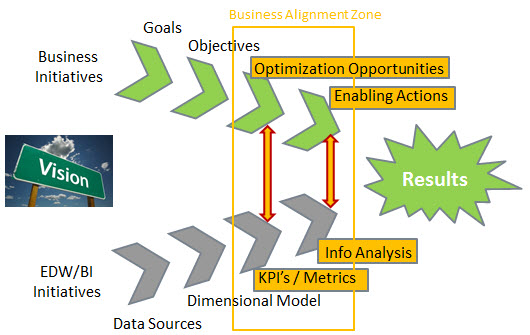

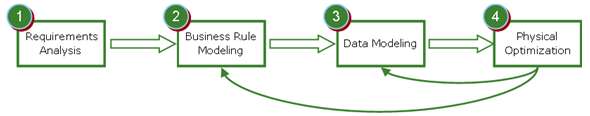

Optimizing Ragged Hierarchies is a four-step processes as depicted in Figure 2.

- Requirements Analysis – The first step to optimizing ragged hierarchies is to identify the business requirements. What is the general model and what are the special requirements? The requirements analysis will narrow down the optimization options.

- Business Rule Modeling– The second step is to allocate business rule processing between the BI query tool, the database structures, and the ETL application. The choices will depend on the query use cases, the capabilities of the BI tool, and service level agreements (SLAs) related to query response times and available ETL batch window times.

- Data Modeling – The third step involves modeling the dimensions, navigation tables, and the database tables and views required to support the loading and navigation of ragged hierarchies.

- Physical Optimization – The fourth step is to optimize the physical database structures based on performance tests. The results will depend on a variety of factors including data volumes, hierarchy versioning requirements, the distribution of data, the frequency of updating the hierarchies, and the user query profiles. Physical optimization may lead to adjustments in steps 2 and 3.

***********

In summary, ragged hierarchies are a fact of life in the ragged real world. Hopefully the techniques in this post will help you smooth things over with your users 🙂 I would love to hear your feedback on these and other approaches for handling ragged hierarchies.